Paper

Abdulrahman Kerim, Ufuk Celikcan, Erkut Erdem, and Aykut Erdem. "Using Synthetic Data for Person Tracking Under Adverse Weather Conditions", Image and Vision Computing.

Preprint (with low-res images) | Published Version

Supplementary Material | Bibtex

Abstract

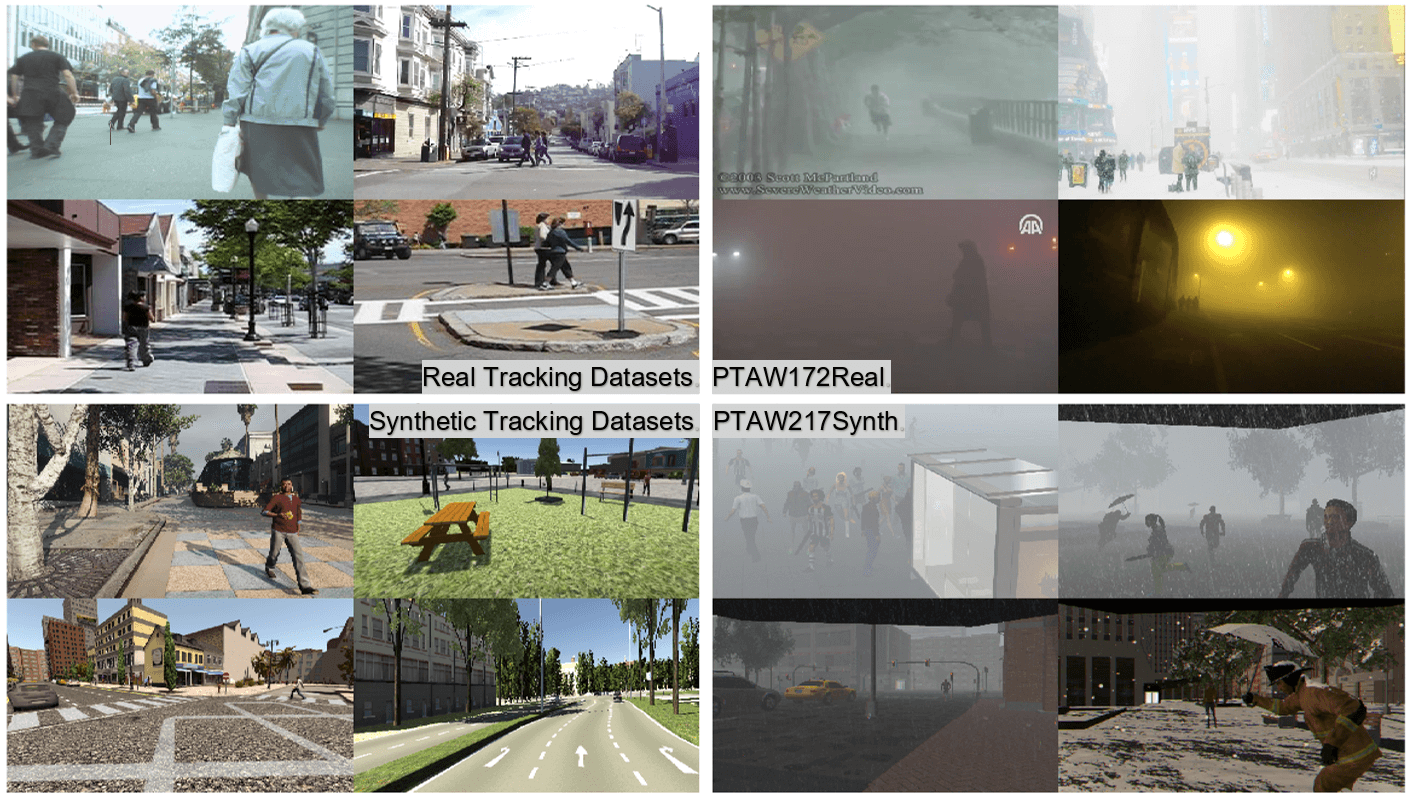

Robust visual tracking plays a vital role in many areas such as autonomous cars, surveillance and robotics. Recent trackers have been shown to achieve adequate results in normal tracking scenarios with clear weather, standard camera setups and typical lighting conditions. Yet the performance of these trackers, whether correlation filter-based or learning-based, degrades under adverse weather conditions. The lack of videos with such weather conditions in the available visual object tracking datasets is the prime reason for the low performance of learning-based tracking algorithms. In this work, we provide a new person tracking dataset of real-world sequences (PTAW172Real) captured under foggy, rainy and snowy weather conditions to assess the performance of current trackers. We also introduce a novel person tracking dataset of synthetic sequences (PTAW217Synth), procedurally generated by our NOVA-Extended framework and spanning the same weather conditions at varying severity, to mitigate the problem of data scarcity. Our experimental results demonstrate that the performance of state-of-the-art deep trackers under adverse weather conditions can be boosted when the available real training sequences are complemented with our synthetically generated dataset during training.
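To make the idea of complementing real training sequences with synthetic ones more concrete, here is a minimal sketch of assembling a mixed training pool. It is only an illustration under stated assumptions: the `Sequence` type, the `build_training_pool` function and the `synth_ratio` parameter are hypothetical names introduced here, not part of the paper's code, and the paper's actual training protocol (sampling, ratios, fine-tuning schedule) may differ.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Sequence:
    # Hypothetical record describing one tracking video.
    name: str
    source: str   # "real" or "synthetic"
    weather: str  # e.g. "fog", "rain", "snow"


def build_training_pool(real: List[Sequence], synthetic: List[Sequence],
                        synth_ratio: float, seed: int = 0) -> List[Sequence]:
    """Keep every real sequence and add a random subset of synthetic ones so
    that synthetic sequences make up roughly `synth_ratio` of the pool
    (an assumed mixing scheme, capped by how many synthetic sequences exist)."""
    if not 0.0 <= synth_ratio < 1.0:
        raise ValueError("synth_ratio must be in [0, 1)")
    # Solve n_synth / (len(real) + n_synth) = synth_ratio for n_synth.
    n_synth = round(len(real) * synth_ratio / (1.0 - synth_ratio))
    n_synth = min(n_synth, len(synthetic))
    rng = random.Random(seed)
    pool = list(real) + rng.sample(synthetic, n_synth)
    rng.shuffle(pool)  # interleave real and synthetic sequences
    return pool


# Usage with toy data (names and weather labels are made up for illustration).
real = [Sequence(f"real_{i}", "real", w)
        for i, w in enumerate(["fog", "rain", "snow", "rain"])]
synth = [Sequence(f"synth_{i}", "synthetic", ["fog", "rain", "snow"][i % 3])
         for i in range(10)]
pool = build_training_pool(real, synth, synth_ratio=0.5)
```

Keeping all real sequences and only subsampling the synthetic pool reflects the framing above, where synthetic data complements rather than replaces real data; the ratio is a tunable assumption.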