Paper

Ali Egemen Taşören, Ufuk Celikcan. "NOVAction23: Addressing the Data Diversity Gap by Uniquely Generated Synthetic Sequences for Real-World Human Action Recognition", Computers & Graphics (2024).

Preprint

Scripts: MMAction2 (to reproduce the results, use version 0.24.1)
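
Since the results depend on a specific MMAction2 release, it can help to confirm the installed version before running anything. The following is a minimal sketch, not part of the original scripts: the helper names `matches_required` and `check_mmaction2` are hypothetical, and the distribution name `mmaction2` is an assumption about how the package was installed in your environment.

```python
from importlib.metadata import PackageNotFoundError, version

REQUIRED_VERSION = "0.24.1"  # version named above for reproducing the results


def matches_required(installed: str, required: str = REQUIRED_VERSION) -> bool:
    """Return True when the installed version string equals the required one."""
    return installed.strip() == required


def check_mmaction2(dist_name: str = "mmaction2") -> str:
    """Describe the state of the local install.

    The distribution name "mmaction2" is an assumption; adjust it if the
    package is registered under a different name in your environment.
    """
    try:
        installed = version(dist_name)
    except PackageNotFoundError:
        return f"{dist_name} is not installed"
    if matches_required(installed):
        return f"{dist_name} {installed} matches the required version"
    return f"{dist_name} {installed} found, but {REQUIRED_VERSION} is expected"


if __name__ == "__main__":
    print(check_mmaction2())
```

An exact string comparison is used here because the note above names a single release rather than a version range.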

Abstract

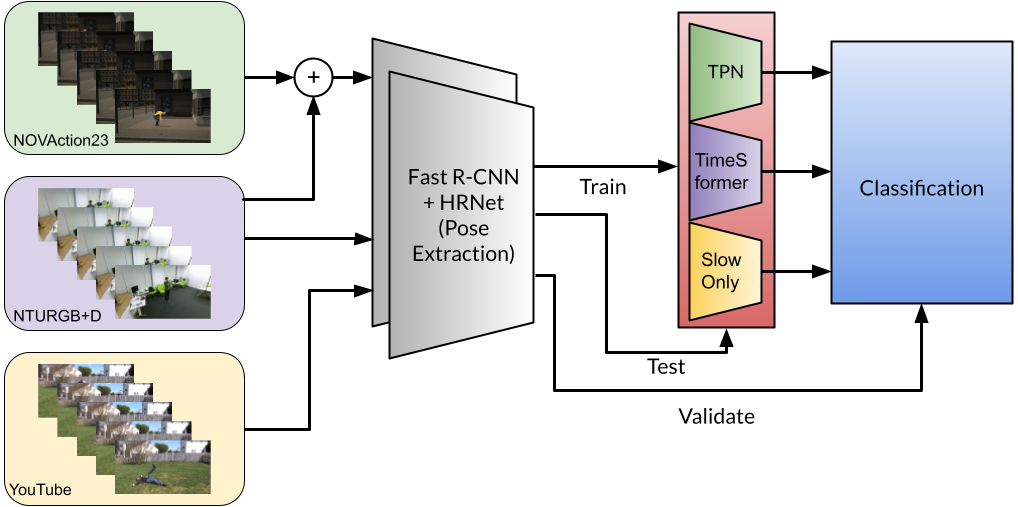

Recognizing human actions with machine learning requires substantial training datasets to achieve satisfactory results. However, obtaining such data is challenging: recruiting subjects is costly, and recording, pre-processing, and labeling videos individually is time-consuming. Furthermore, the majority of existing human action datasets are recorded indoors. Synthetic data is often used to circumvent these difficulties, yet currently available synthetic data lacks both photorealism and diversity in its features. We propose the NOVAction engine, a tool that generates diverse, photorealistic data in both indoor and outdoor settings. We then use the NOVAction engine to create the NOVAction23 dataset and train classifiers on both the proposed dataset and existing state-of-the-art data. Our results are further validated on real-world videos sourced from YouTube. The findings confirm that the NOVAction23 dataset can enhance the performance of state-of-the-art video classification for human action recognition.