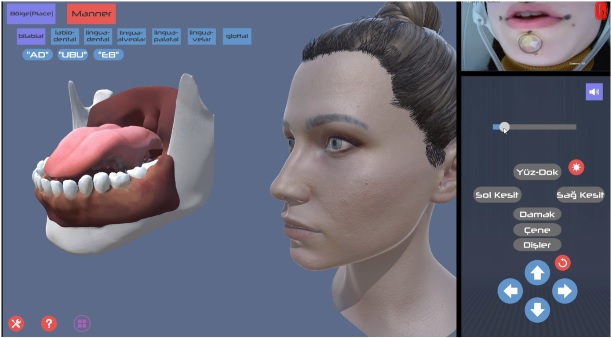

Monitoring Vocal Tract and Face Movements for 3D Modeling and Animation for Turkish

Accurate data on the movements and shapes of the vocal tract and the articulators during speech are essential for phonetic studies and form the basis for the treatment of speech disorders. In recent years, MRI has become an important tool in speech production research because it can capture dynamic information about the vocal tract and articulator movement during speech. However, the acoustic noise generated during MRI acquisition makes it difficult to record the acoustic signal (the speaker's voice) and to synchronize it with the MR images. In addition, MRI recordings cover only the vocal tract and the articulators, whose movements are normally not visible externally during speech. In this study, an MR microphone, a microphone-camera synchronization set, and an MR-compatible sound-music system will be used so that the acoustic signal is acquired simultaneously with the images and the acoustic noise is suppressed. Two MR bore cameras mounted on the MRI device will simultaneously record movements of the mouth and the surrounding area during speech. Markers will also be placed on the speaker's face, and facial movements during speech will be recorded with Motion Capture (MOCAP) cameras. With both MRI and MOCAP, target Turkish sounds and words will be recorded and the accompanying changes in motion and shape observed. The MRI and MOCAP data will then be processed for 3D modeling and animation of the vocal tract and articulators. The resulting 3D models and animations, which will show the movement and shape changes that occur during the production of Turkish speech sounds, are expected to be an important resource for understanding how these sounds are produced. The project is intended to serve as a foundation for further studies on this and related topics.

Grant No: 117E183

Principal Investigator: Asst. Prof. Dr. Maviş Emel KULAK KAYIKÇI

Co-Investigators: Prof. Dr. Haşmet GÜRÇAY, Dr. Serdar ARITAN, Dr. Ayça KARAOSMANOĞLU