Structure-Preserving Image Smoothing via Region Covariances

Recent years have witnessed the emergence of new image smoothing techniques that have provided new insights and raised new questions about the nature of this well-studied problem. Specifically, these models separate a given image into its structure and texture layers by utilizing non-gradient-based definitions of edges or special measures that distinguish edges from oscillations. In this study, we propose an alternative yet simple image smoothing approach that depends on covariance matrices of simple image features, also known as region covariances. The use of second-order statistics as a patch descriptor allows us to implicitly capture local structure and texture information and makes our approach particularly effective for extracting structure from texture. Our experimental results show that the proposed approach leads to better image decompositions than state-of-the-art methods and preserves prominent edges and shading well. We also demonstrate the applicability of our approach to several image editing and manipulation tasks, such as image abstraction, texture and detail enhancement, image composition, inverse halftoning and seam carving.
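
As a rough illustration of the descriptor mentioned above, the sketch below builds a per-pixel feature vector over a square patch and computes its sample covariance matrix in plain Python. The feature set (pixel coordinates, intensity, absolute x and y gradients) is an assumption borrowed from common region covariance practice, and the helper names `patch_features` and `region_covariance` are hypothetical; the paper's actual features and smoothing scheme may differ.

```python
from typing import List, Sequence

Image = List[List[float]]


def patch_features(img: Image, x0: int, y0: int, size: int) -> List[List[float]]:
    """Feature vector [x, y, I, |Ix|, |Iy|] for every pixel of a size x size patch."""
    h, w = len(img), len(img[0])
    feats = []
    for y in range(y0, y0 + size):
        for x in range(x0, x0 + size):
            # Finite-difference gradients: central inside, one-sided at the border.
            xl, xr = max(x - 1, 0), min(x + 1, w - 1)
            yu, yd = max(y - 1, 0), min(y + 1, h - 1)
            ix = (img[y][xr] - img[y][xl]) / max(xr - xl, 1)
            iy = (img[yd][x] - img[yu][x]) / max(yd - yu, 1)
            feats.append([float(x), float(y), float(img[y][x]), abs(ix), abs(iy)])
    return feats


def region_covariance(feats: Sequence[Sequence[float]]) -> List[List[float]]:
    """Unbiased sample covariance (d x d) of n >= 2 feature vectors."""
    n, d = len(feats), len(feats[0])
    mean = [sum(f[k] for f in feats) / n for k in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for f in feats:
        diff = [f[k] - mean[k] for k in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += diff[i] * diff[j]
    return [[c / (n - 1) for c in row] for row in cov]
```

Because the descriptor is a d x d matrix regardless of patch size, patches of different sizes stay directly comparable, and the off-diagonal entries record how features co-vary (on a horizontal intensity ramp, for example, intensity co-varies exactly with the x coordinate).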

Publication page

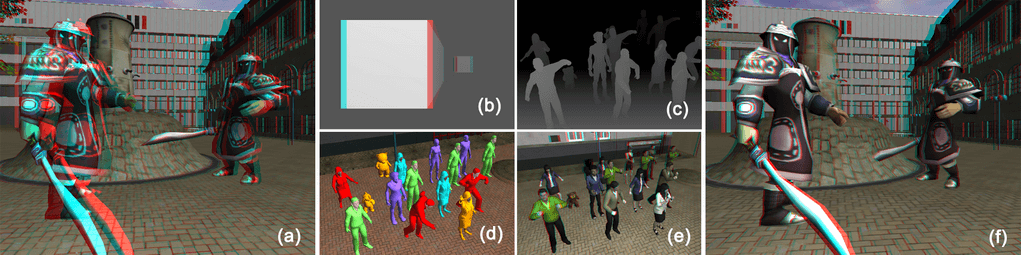

Attention-Aware Disparity Control in Interactive Environments

The paper introduces a novel approach for controlling stereo camera parameters in interactive 3D environments in a way that specifically addresses the interplay of binocular depth perception and the saliency of scene contents. Our proposed Dynamic Attention-Aware Disparity Control (DADC) method produces depth-rich stereo rendering that improves viewer comfort through joint optimization of stereo parameters. While constructing the optimization model, we consider the importance of scene elements, as well as their distance to the camera and the locus of attention on the display. Our method also optimizes the depth effect of a given scene by considering the individual user’s stereoscopic disparity range and ensures a comfortable viewing experience by controlling the accommodation/convergence conflict. We validate our method in a formal user study that also reveals its advantages, such as superior quality and practical relevance.
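
The disparity budget idea can be made concrete with the textbook relation for a parallel stereo rig with shifted sensors, d(Z) = f b (1/C - 1/Z), where b is the interaxial distance, C the convergence (zero-disparity) distance and f the focal length in pixels. The sketch below is a hypothetical single-constraint baseline, not DADC: it fixes C (for instance at the depth of the attended object) and returns the largest b that keeps every scene depth inside a viewer's comfortable disparity range [d_min, d_max]. Function names are illustrative only.

```python
import math
from typing import Sequence


def screen_disparity(z: float, baseline: float, convergence: float, focal: float) -> float:
    """Disparity of a point at depth z for a parallel, shifted-sensor stereo rig.

    Positive values are uncrossed (behind the zero-disparity plane),
    negative values are crossed (in front of it).
    """
    return focal * baseline * (1.0 / convergence - 1.0 / z)


def max_baseline(depths: Sequence[float], convergence: float, focal: float,
                 d_min: float, d_max: float) -> float:
    """Largest interaxial distance keeping all depths inside [d_min, d_max].

    d_min < 0 < d_max is the viewer's comfortable disparity range.
    Returns math.inf if no depth constrains the baseline.
    """
    best = math.inf
    for z in depths:
        t = 1.0 / convergence - 1.0 / z
        if t > 0:    # behind the screen: bounded by the uncrossed limit
            best = min(best, d_max / (focal * t))
        elif t < 0:  # in front of the screen: bounded by the crossed limit
            best = min(best, d_min / (focal * t))
    return best
```

DADC additionally weights scene elements by importance and optimizes the parameters jointly; this sketch only shows why a per-user disparity range bounds the interaxial distance.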

Publication page

The State of the Art in HDR Deghosting: A Survey and Evaluation

Obtaining a high-quality high dynamic range (HDR) image in the presence of camera and object movement has been a long-standing challenge. Many methods, known as HDR deghosting algorithms, have been developed over the past ten years to undertake this challenge. Each of these algorithms approaches the deghosting problem from a different perspective, providing solutions with different degrees of complexity, ranging from rudimentary heuristics to advanced computer vision techniques. The proposed solutions generally differ in two ways: (1) how to detect ghost regions and (2) what to do to eliminate ghosts. Some algorithms choose to completely discard moving objects, giving rise to HDR images that only contain the static regions. Other algorithms try to find the best image to use for each dynamic region. Yet others try to register moving objects from different images in the spirit of maximizing dynamic range in dynamic regions. Furthermore, each algorithm may introduce different types of artifacts as it aims to eliminate ghosts. These artifacts may come in the form of noise, broken objects, under- and over-exposed regions, and residual ghosting. Given the high volume of studies conducted in this field in recent years, a comprehensive survey of the state of the art is required. Thus, the first goal of this paper is to provide this survey. Secondly, the large number of algorithms brings about the need to classify them. Thus, the second goal of this paper is to propose a taxonomy of deghosting algorithms that can be used to group existing and future algorithms into meaningful classes. Thirdly, the existence of a large number of algorithms brings about the need to evaluate their effectiveness, as each new algorithm claims to outperform its predecessors. Therefore, the last goal of this paper is to share the results of a subjective experiment that aims to evaluate various state-of-the-art deghosting algorithms.

Publication page

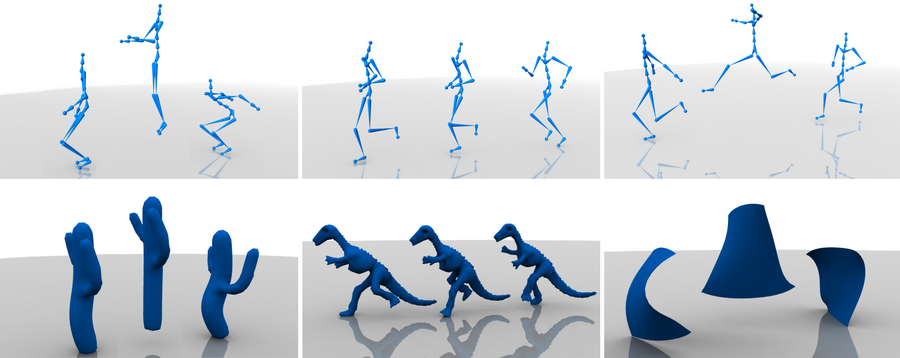

Example Based Retargeting of Human Motion to Arbitrary Mesh Models

We present a novel method for retargeting human motion to arbitrary 3D mesh models with as little user interaction as possible. Traditional motion-retargeting systems try to preserve the original motion, while satisfying several motion constraints. Our method uses a few pose-to-pose examples provided by the user to extract the desired semantics behind the retargeting process while not limiting the transfer to being only literal. Thus, mesh models with different structures and/or motion semantics from humanoid skeletons become possible targets. Also considering the fact that most publicly available mesh models lack additional structure (e.g. skeleton), our method dispenses with the need for such a structure by means of a built-in surface-based deformation system. As deformation for animation purposes may require non-rigid behaviour, we augment existing rigid deformation approaches to provide volume-preserving and squash-and-stretch deformations. We demonstrate our approach on well-known mesh models along with several publicly available motion-capture sequences.
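
Squash-and-stretch with volume preservation is commonly expressed as a scale of s along one axis and 1/sqrt(s) along the two perpendicular directions, so the determinant, and hence local volume, stays 1. The sketch below builds that 3x3 matrix for an arbitrary axis; it illustrates the general principle only and is not the paper's surface-based deformation system. The function names `squash_stretch` and `det3` are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def squash_stretch(axis: Sequence[float], s: float) -> Matrix:
    """Volume-preserving scale: factor s along `axis`, 1/sqrt(s) across it.

    M = s * a a^T + (1/sqrt(s)) * (I - a a^T), with a the normalized axis.
    """
    n = math.sqrt(sum(c * c for c in axis))
    a = [c / n for c in axis]
    side = 1.0 / math.sqrt(s)
    return [[s * a[i] * a[j] + side * ((1.0 if i == j else 0.0) - a[i] * a[j])
             for j in range(3)] for i in range(3)]


def det3(m: Matrix) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
```

A stretch (s > 1) thins the element across the axis and a squash (s < 1) widens it, while det3 of the result stays at 1 up to rounding.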

Publication page

An Objective Deghosting Quality Metric for HDR Images

Reconstructing high dynamic range (HDR) images of a complex scene involving moving objects and dynamic backgrounds is prone to artifacts. A large number of methods, known as HDR deghosting algorithms, have been proposed to alleviate these artifacts. Currently, the quality of these algorithms is judged by subjective evaluations, which are tedious to conduct and quickly become outdated as new algorithms are proposed at a rapid pace. In this paper, we propose an objective metric that aims to simplify this process. Our metric takes a stack of input exposures and the deghosting result and produces a set of artifact maps for different types of artifacts. These artifact maps can be combined to yield a single quality score. We performed a subjective experiment involving 52 subjects and 16 different scenes to validate the agreement of our quality scores with subjective judgements and observed a concordance of almost 80%. Our metric also enables a novel application that we call hybrid deghosting, in which the outputs of different deghosting algorithms are combined to obtain a superior deghosting result.
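
The abstract does not specify how artifact maps are pooled or how concordance is measured, so the sketch below makes both assumptions explicit: a hypothetical weighted mean of per-pixel artifact strengths in [0, 1] (1 meaning severe) turned into a higher-is-better score, and concordance defined as the fraction of non-tied result pairs that the metric and the subjective scores order the same way. Names and weights are illustrative, not the paper's.

```python
from itertools import combinations
from typing import Dict, List, Sequence


def quality_score(artifact_maps: Dict[str, List[List[float]]],
                  weights: Dict[str, float]) -> float:
    """Hypothetical pooling: 1 minus the weighted mean artifact strength."""
    total = 0.0
    for name, amap in artifact_maps.items():
        values = [v for row in amap for v in row]
        total += weights[name] * sum(values) / len(values)
    return 1.0 - total / sum(weights[name] for name in artifact_maps)


def pairwise_concordance(metric: Sequence[float], subjective: Sequence[float]) -> float:
    """Fraction of non-tied pairs that the metric and the subjects rank the same way."""
    agree = considered = 0
    for i, j in combinations(range(len(metric)), 2):
        dm = metric[i] - metric[j]
        ds = subjective[i] - subjective[j]
        if dm == 0 or ds == 0:
            continue  # ties carry no ordering information
        considered += 1
        if (dm > 0) == (ds > 0):
            agree += 1
    return agree / considered if considered else float("nan")
```

Under this definition, a value near 0.8 would mean that roughly four out of five distinguishable pairs are ordered identically by the metric and by the observers.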

Publication page

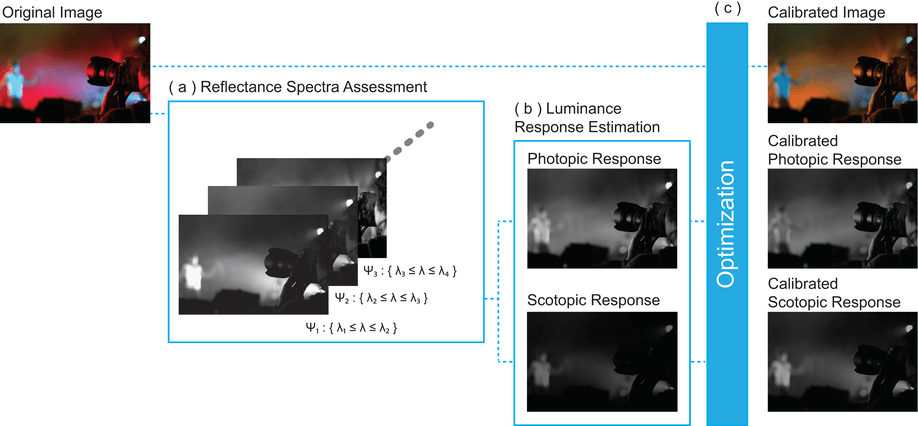

Image Reproduction with Compensation of Luminance Adaptation

We introduce an image reproduction model that retargets colors for printing purposes to ensure similar luminance perception under photopic and scotopic vision. Our model is based on the physiological functioning of the rod and cone cells in the retina under varying lighting conditions, so that the human visual system responds to the model's printed output in a similar way at different illumination levels. Prior to retargeting, digital color images are converted to spectral representations, and their photopic and scotopic luminance responses are obtained. The color retargeting is realized by optimizing our compensation function over the color space. In addition, we present a spatially varying operator to enhance color coherence over salient regions. Reproduction results demonstrate a substantially decreased difference between the two luminance responses. Furthermore, psychophysical evaluation validates that, on average, our model provides superior recognition rates in dark environments while keeping the noticeable differences in aesthetic appeal acceptable in well-lit environments.
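
The photopic and scotopic responses mentioned above are conventionally obtained by integrating a spectrum against the CIE sensitivity curves V(λ) and V'(λ), scaled by 683 lm/W and approximately 1700 lm/W respectively. The sketch below shows that integration as a Riemann sum on a shared wavelength grid; the CIE tables themselves are not reproduced and must be supplied by the caller, and any toy values used with it are placeholders, not CIE data. The function names are hypothetical.

```python
from typing import Sequence, Tuple

K_PHOTOPIC = 683.0   # lm/W, maximum photopic luminous efficacy (at 555 nm)
K_SCOTOPIC = 1700.0  # lm/W, approximate maximum scotopic efficacy (near 507 nm)


def luminance(spd: Sequence[float], sensitivity: Sequence[float],
              step_nm: float, k: float) -> float:
    """Riemann-sum luminance of a sampled spectrum against a sensitivity curve."""
    if len(spd) != len(sensitivity):
        raise ValueError("spectrum and sensitivity must share the same wavelength grid")
    return k * step_nm * sum(s * v for s, v in zip(spd, sensitivity))


def photopic_scotopic(spd: Sequence[float], v_photopic: Sequence[float],
                      v_scotopic: Sequence[float], step_nm: float) -> Tuple[float, float]:
    """Return (photopic, scotopic) luminance for one spectral sample."""
    return (luminance(spd, v_photopic, step_nm, K_PHOTOPIC),
            luminance(spd, v_scotopic, step_nm, K_SCOTOPIC))
```

Comparing the two returned values per pixel is one simple way to see where the rod and cone responses to the same color diverge.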

Publication page

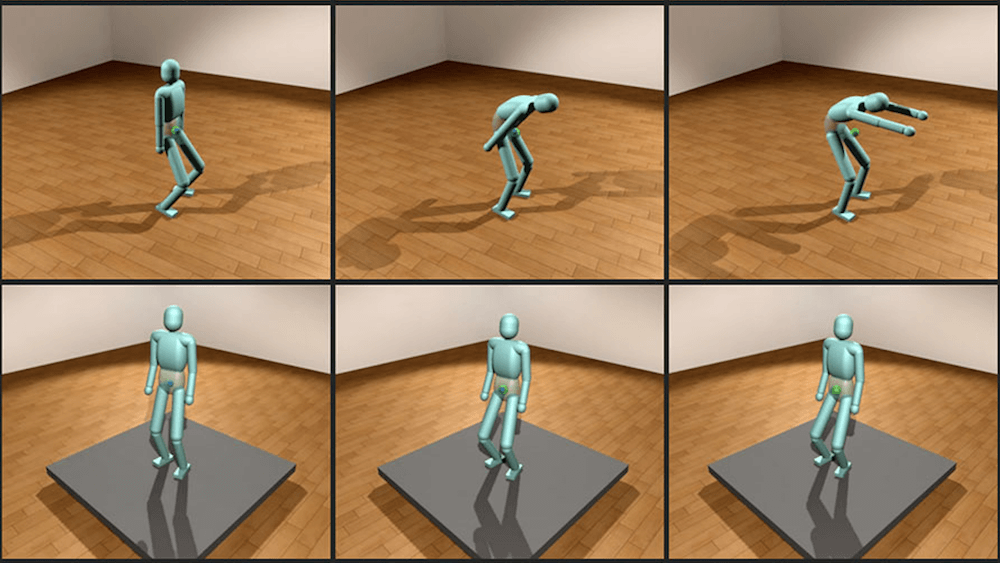

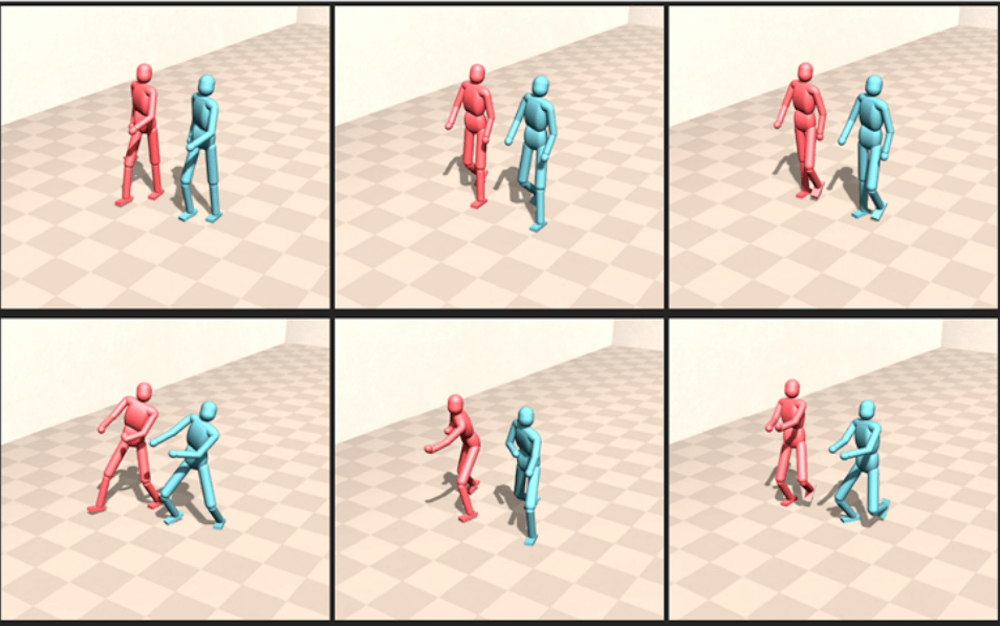

Robust Standing Control with Posture Optimization

Humans need to shift their center of mass during standing for several purposes such as preparing for the upcoming motion or increasing their stability. In this paper, we present a control strategy for robust center of mass shifting motions during standing. In our strategy, the desired posture can be defined with only a few high level features, such as the desired character center of mass position and the foot configurations. An online optimization process is designed for generating a kinematic lower body and pelvis posture that satisfies these high level features together with some criteria that guide a natural standing pose. Natural knee bending behaviours automatically arise as a result of this optimization process. Internal joint torques for tracking this optimized posture together with the given desired upper body pose are calculated by the physics-based control framework. Moreover, a physics-based arm control strategy that regulates the angular momentum of the character is devised in order to increase the robustness of the character under external disturbances. Several experiments are conducted to demonstrate the effectiveness of the proposed strategy. Because the strategy does not include any off-line parameter optimization, equations of motion, or inverse dynamics, it is highly suitable for online applications.
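
The high-level posture target above revolves around the character's center of mass. A minimal sketch of the quantities involved, assuming a y-up world and a segment-based character: the whole-body center of mass as the mass-weighted average of segment centers, and a crude check that its ground projection lies over an axis-aligned box standing in for the foot support region. Neither function is taken from the paper, and the online posture optimization itself is not shown.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def center_of_mass(masses: Sequence[float], positions: Sequence[Vec3]) -> Vec3:
    """Mass-weighted average of per-segment centers of mass."""
    total = sum(masses)
    cx = sum(m * p[0] for m, p in zip(masses, positions)) / total
    cy = sum(m * p[1] for m, p in zip(masses, positions)) / total
    cz = sum(m * p[2] for m, p in zip(masses, positions)) / total
    return (cx, cy, cz)


def com_over_support(com: Vec3, lo: Tuple[float, float], hi: Tuple[float, float]) -> bool:
    """True if the ground projection (x, z) of the COM lies inside an axis-aligned box.

    Assumes a y-up world; the box is a crude stand-in for the foot support polygon.
    """
    x, _, z = com
    return lo[0] <= x <= hi[0] and lo[1] <= z <= hi[1]
```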

Publication page

Style-based Biped Walking Control

We present a control approach for synthesizing physics-based walking motions that mimic the style of a given reference walking motion. Style transfer between the reference motion and its physically simulated counterpart is achieved via extracted high-level features such as the trajectory of the swing ankle and the twist of the swing leg during stepping. The physically simulated motion is also capable of tracking the intra-step variations of the sagittal character center of mass velocity of the reference walking motion. This is achieved by an adaptive velocity control strategy that is fed by a gain–deviation relation curve learned offline. This curve is learned once from a number of training walking motions and is used for velocity control of other reference walking motions. The control approach is tested with motion capture data of several walking motions of different styles. The approach also enables generating various styles manually or by varying the high-level features of existing motion capture data. The demonstrations show that the proposed control framework is capable of synthesizing robust motions that mimic the desired style regardless of the changing environment or character proportions.

Publication page

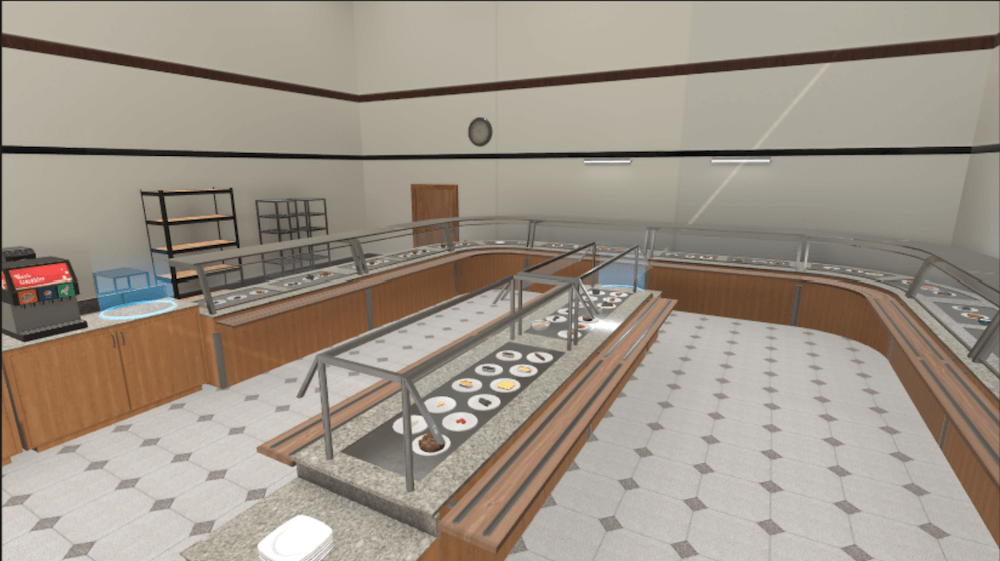

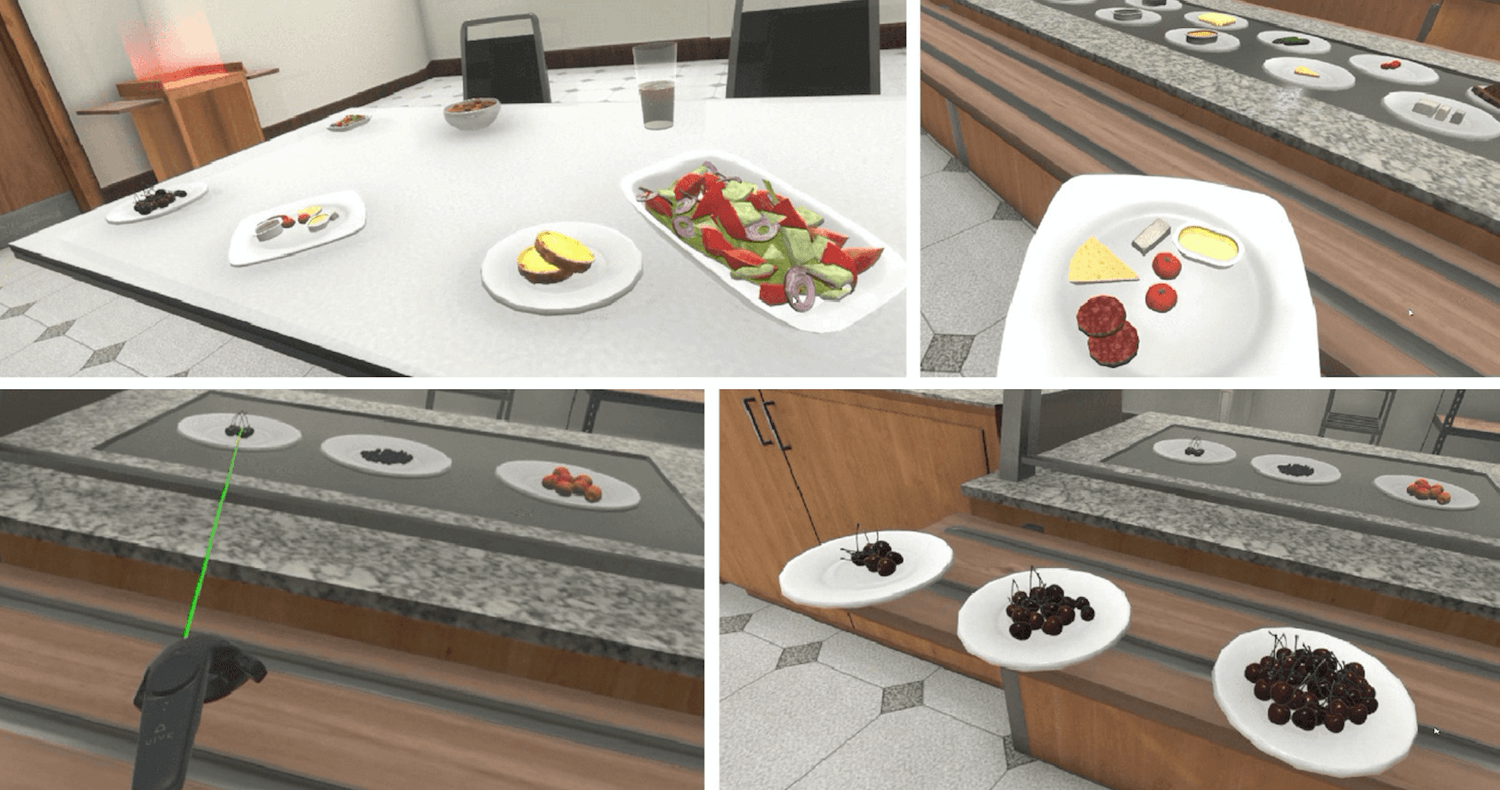

The Virtual Cafeteria: An Immersive Environment for Interactive Food Portion-Size Education

This work introduces the Virtual Cafeteria, a novel virtual reality simulation of a buffet-style cafeteria for use in food portion-size education of adolescents as an alternative to established approaches such as verbal education and education with fake food replicas. As user behavior is automatically recorded to the millisecond, the application also serves as a tool for assessment of food portion-size perception. The Virtual Cafeteria offers a wide range of energy and nutrient densities in 64 food and 7 beverage options. Addressing the limitations and shortcomings of the immersive environments used in previous studies on food portion-size, the Virtual Cafeteria features not only a considerably wider variety of food but also multisensory feedback coupled with a high level of interaction and photorealism, and imperceptible latency.

Publication page

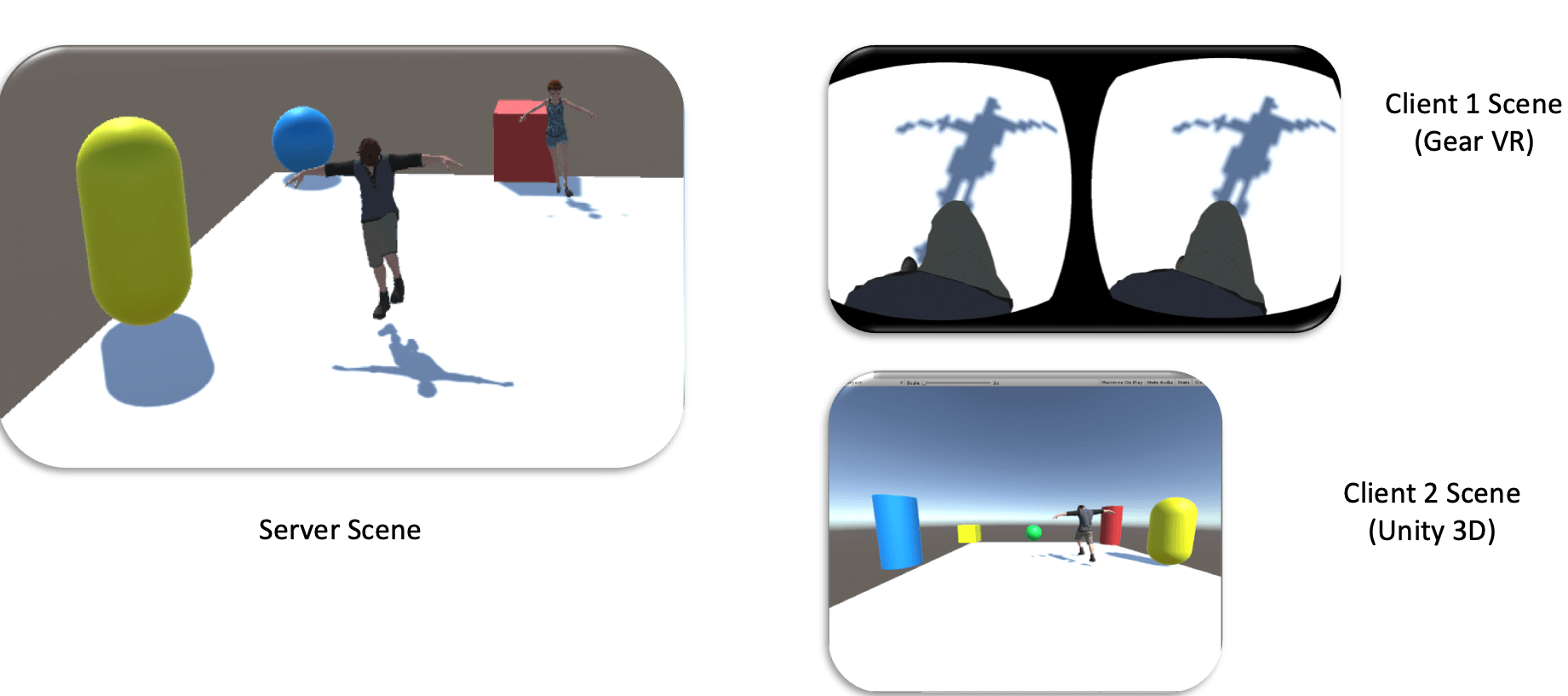

Collaborative Virtual Environments with Virtual Reality and Motion Capture Systems

Virtual Reality for Anxiety Disorders

Virtual reality is a relatively new exposure tool that uses three-dimensional, computer-graphics-based technologies that allow individuals to feel as if they are physically inside the virtual environment by misleading their senses. As virtual reality studies have become popular in clinical psychology in recent years, it has been observed that virtual-reality-based therapies have a wide range of application areas, especially anxiety disorders. Studies indicate that virtual reality can be more realistic than mental imagery and can create a stronger feeling of “presence”; that it is a safer starting point than in vivo exposure; and that it can be applied in a more practical and controlled manner. The aim of this review is to investigate virtual-reality-based exposure studies in anxiety disorders (specific phobias, panic disorder and agoraphobia, generalized anxiety disorder, social phobia), posttraumatic stress disorder and obsessive-compulsive disorder.

Publication page

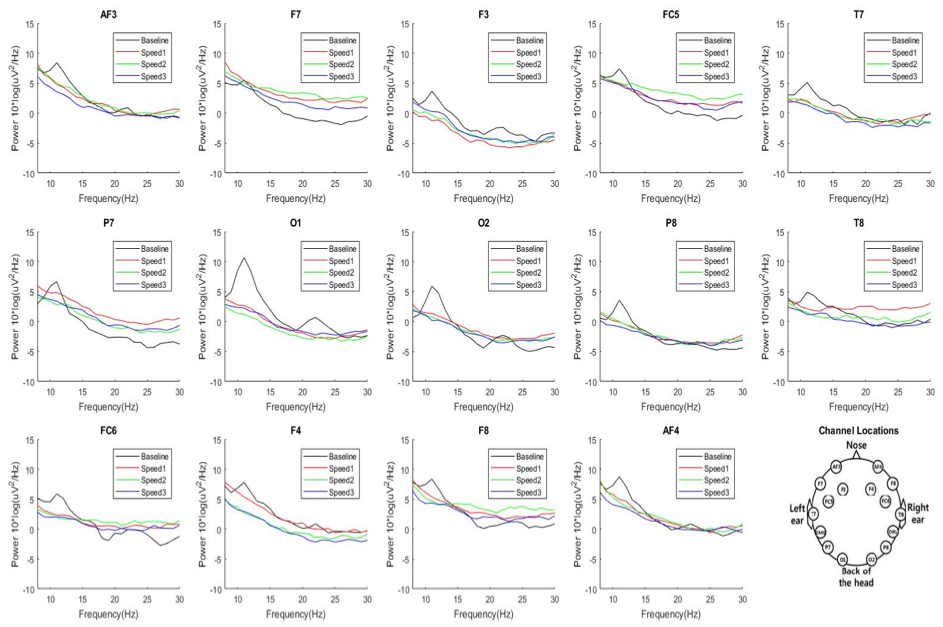

Detection and Mitigation of Cybersickness via EEG-Based Visual Comfort Improvement

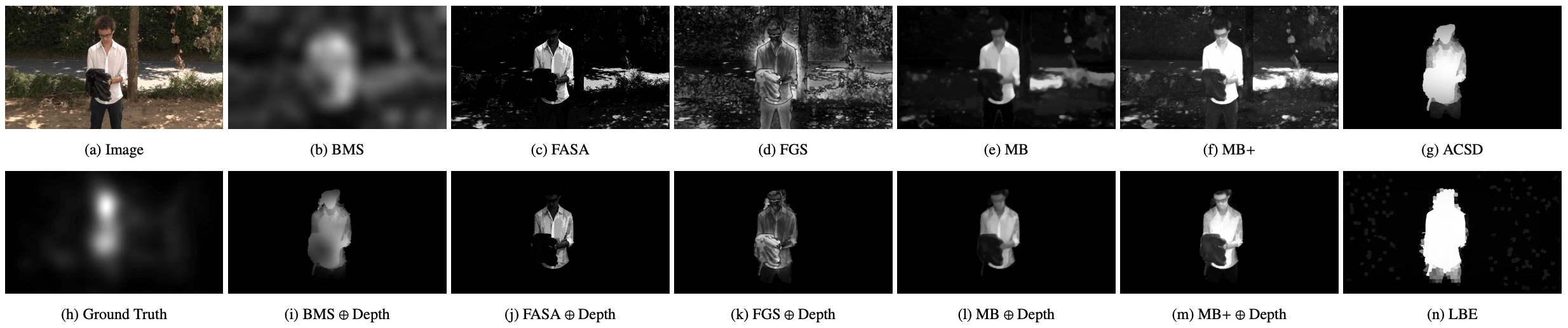

Publication page

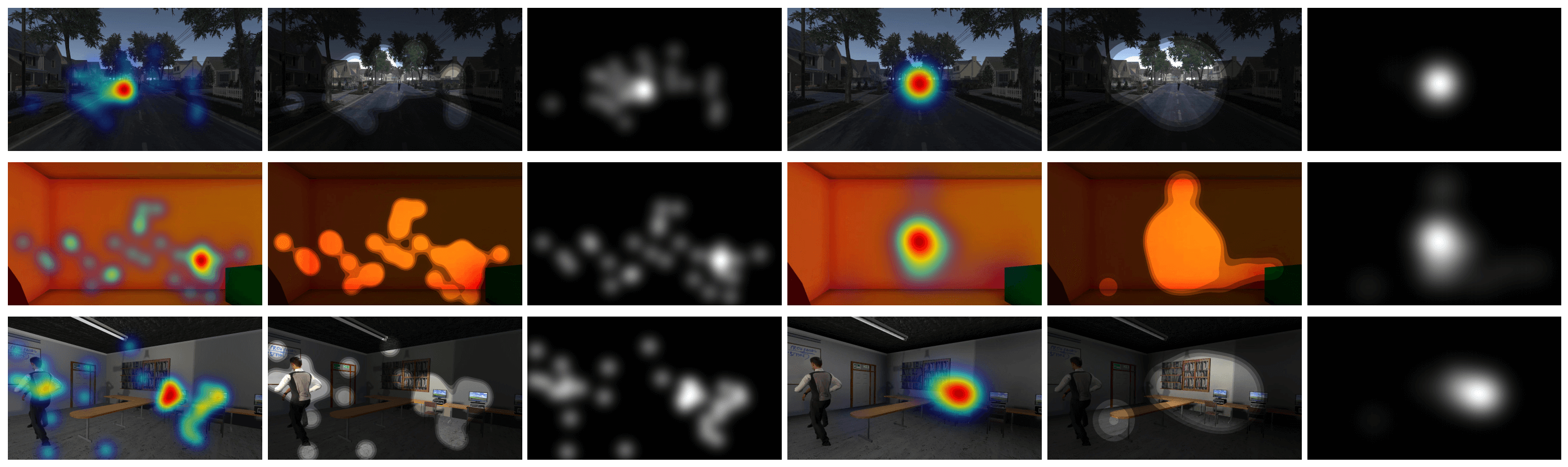

Visual Saliency Prediction in Dynamic Virtual Reality Environments Experienced with Head-Mounted Displays: An Exploratory Study

This work explores a set of well-studied visual saliency features through seven saliency prediction methods with the aim of assessing how applicable they are for estimating visual saliency in dynamic virtual reality (VR) environments that are experienced with head-mounted displays. An in-depth analysis of how the saliency methods that make use of depth cues compare to ones that are based on purely image-based (2D) features is presented. To this end, a user study was conducted to collect gaze data from participants as they were shown the same set of three dynamic scenes in 2D desktop viewing and 3D VR viewing using a head-mounted display. The scenes convey varying visual experiences in terms of contents and range of depth-of-field so that an extensive analysis encompassing a comprehensive array of viewing behaviors could be provided. The results indicate that 2D features matter as much as depth for both viewing conditions, yet depth cue is slightly more important for 3D VR viewing. Furthermore, including depth as an additional cue to the 2D saliency methods improves prediction for both viewing conditions, and the benefit margin is greater in 3D VR viewing.

Publication page

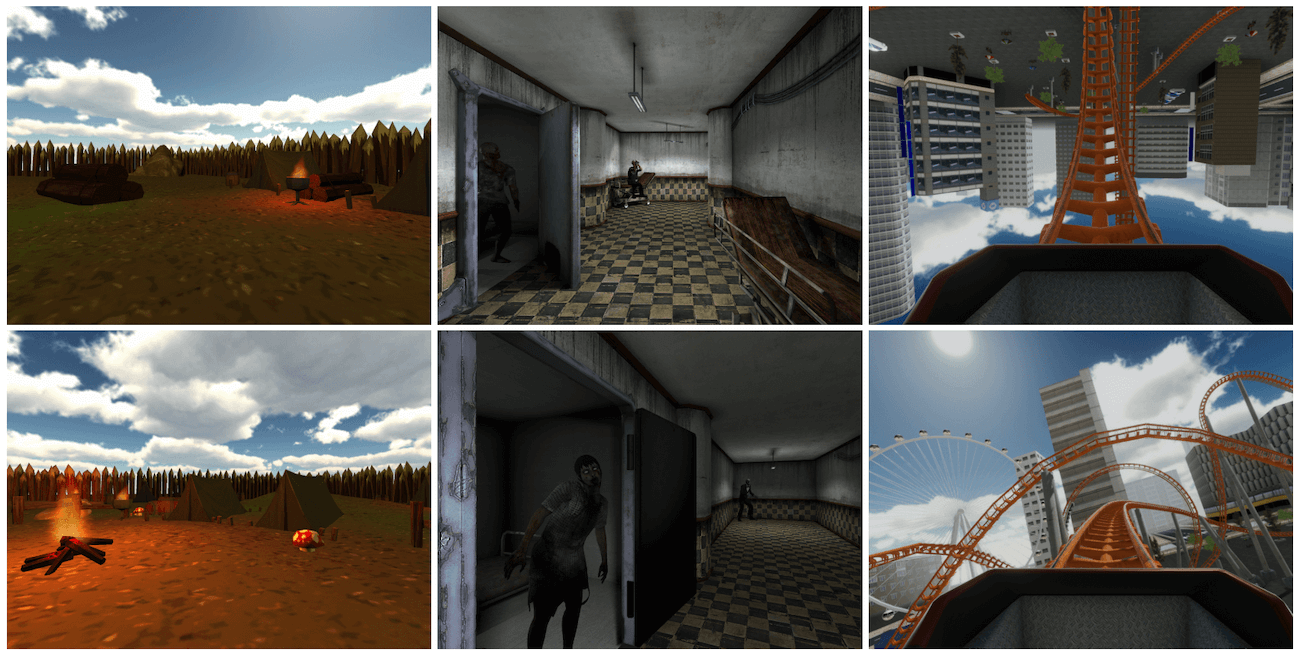

A Comprehensive Study of the Affective and Physiological Responses Induced by Dynamic Virtual Reality Environments

Previous studies have shown that virtual reality (VR) environments can affect emotional state and cause significant changes in physiological responses. Aside from these effects, inadvertently induced cybersickness is a notorious problem in VR. In this study, to further investigate the effects of virtual environments (VEs) with different contexts, three dynamic VEs were created. Each VE had a particular purpose: evoking no emotion in Campfire (CF), unpleasant emotions in Hospital (HH), and cybersickness symptoms in Roller Coaster (RC). We made use of objective measurements of physiological responses such as pupil dilation, blinks, fixations, saccades, and heart rate, as well as subjective self-assessments via pre- and post-VE session questionnaires. While previous studies have investigated different subsets of these measures, our study analyzes them jointly and comprehensively in dynamic VEs. The results indicate that cybersickness produced a higher mean saccade speed, whereas the unpleasant context caused a higher fixation count, saccade rate, and pupil dilation. Moreover, CF decreased anxiety, whereas HH and RC increased it and also decreased comfort. Participants felt cybersickness in all VEs, even in CF, which was designed to minimize these effects.

Publication page

The use of virtual reality (VR) exposure for reducing contamination fear and disgust: Can VR be an effective alternative exposure technique to in vivo?

Publication page

Deep into Visual Saliency for Immersive VR Environments Rendered in Real-Time

As virtual reality (VR) headsets with head-mounted displays (HMDs) are becoming more and more prevalent, new research questions are arising. One of the emergent questions is how best to employ visual saliency prediction in VR applications using the current line of advanced HMDs. Due to the complex nature of the human visual attention mechanism, the problem needs to be investigated from different points of view using different approaches. With this outlook, this work extends previous work exploring a set of well-studied visual saliency cues, and saliency prediction methods that make use of these cues, with the aim of assessing how applicable they are for estimating visual saliency in immersive VR environments that are rendered in real time and experienced with consumer HMDs. To that end, a new user study was conducted with a larger sample, revealing the effects on visual saliency of experiencing dynamic computer-generated scenes with reduced navigation speeds. Using these scenes, which offer varying visual experiences in terms of content and depth-of-field range, the study also compares VR viewing to 2D desktop viewing with an expanded set of results. The presented evaluation offers the most in-depth view of visual saliency in immersive, real-time rendered VR to date. The analysis encompassing the results of both studies indicates that decreasing navigation speed reduces the contribution of the depth cue to visual saliency and has a boosting effect on cues based only on 2D image features. While there are content-dependent variations among their scores, the saliency prediction methods based on boundary connectivity and surroundedness work best in general for the given settings.

Publication page

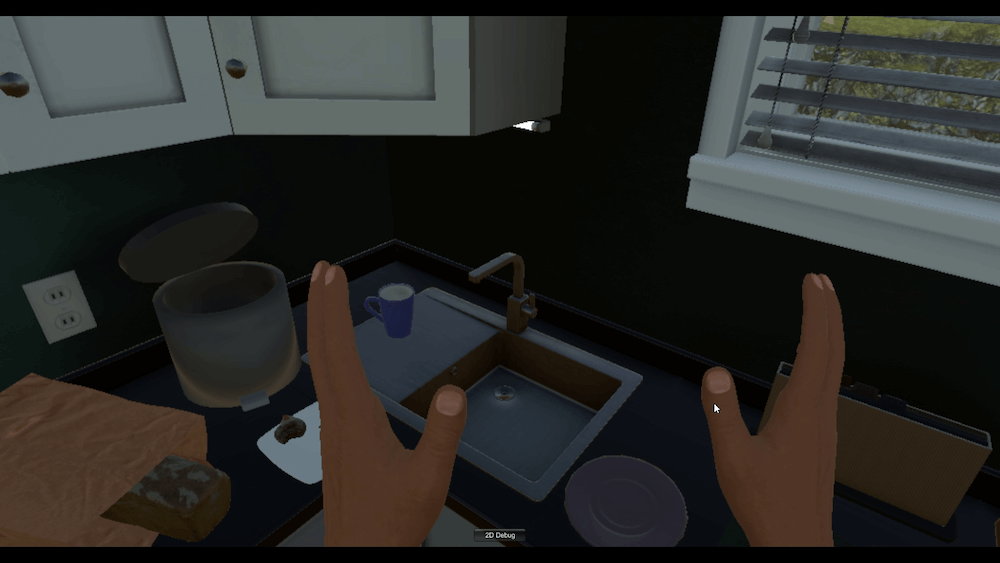

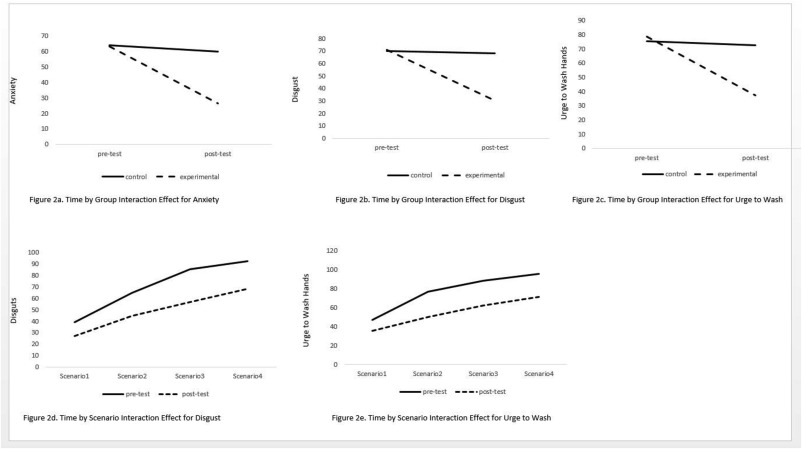

Assessment of virtual reality as an anxiety and disgust provoking tool: The use of VR exposure in individuals with high contamination fear

Preliminary studies have provided promising results on the feasibility of virtual reality (VR) interventions for Obsessive-Compulsive Disorder. The present study investigated whether VR scenarios that were developed for contamination concerns evoke anxiety, disgust, and the urge to wash in individuals with high (n = 33) and low (n = 33) contamination fear. In addition, the feasibility of VR exposure in inducing disgust was examined by testing the mediating role of disgust in the relationship between contamination anxiety and the urge to wash. Participants were immersed in virtual scenarios with varying degrees of dirtiness and rated their level of anxiety, disgust, and the urge to wash after performing the virtual tasks. Data were collected between September and December 2019. The participants with high contamination fear reported higher contamination-related ratings than those with low contamination fear. The significant main effect of dirtiness indicated that anxiety and disgust levels increased with increasing overall dirtiness of the virtual scenarios in both the high and low contamination fear groups. Moreover, disgust elicited by VR mediated the relationship between contamination fear and the urge to wash. The findings demonstrated the feasibility of VR in eliciting the emotional responses that are necessary for conducting exposure in individuals with high contamination fear. In conclusion, VR can be used as an alternative exposure tool in the treatment of contamination-based OCD.

Publication page

Usability Study of a Novel Tool: The Virtual Cafeteria in Nutrition Education

Objective: This study aimed to evaluate the usability of the virtual cafeteria (VC) and determine its suitability for further studies in portion size education and rehabilitation of nutrition.

Methods: The study was conducted with 73 participants (aged 18–40 years). The VC, where the participants performed the task of assembling a meal, was created as a virtual reality simulation of a buffet-style cafeteria (94 food and 10 beverage items). The participants were asked to complete the System Usability Scale, which regards ≥70 points as acceptable, and to give comments about the VC.

Results: The mean System Usability Scale score was 79.4 ± 12.71 (range, 22.2–97.2). Approximately 68% of the participants described positive qualities of the VC. The participants with a technical background reported the VC as more usable (96%) than the others (74%) (χ² = 5.378; df = 1; P = 0.025).

Conclusions and Implications: Offered as a novel tool for education and rehabilitation of nutrition, the VC was confirmed to feature good usability.
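
For reference, the standard System Usability Scale scoring converts ten 1-5 Likert responses into a 0-100 score: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. Standard scoring therefore yields multiples of 2.5, so the reported range endpoints suggest the study may have used an adapted version; the sketch below shows only the textbook form, and the function name is hypothetical.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard System Usability Scale score (0-100) from ten 1-5 Likert responses."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("expected ten responses on a 1-5 scale")
    total = 0
    for i, r in enumerate(responses):
        # Index 0, 2, 4, ... are items 1, 3, 5, ... (positively worded).
        total += (r - 1) if i % 2 == 0 else (5 - r)
    return total * 2.5
```

With the ≥70 acceptability threshold quoted in the Methods, a respondent answering 3 (neutral) on every item would score 50 and fall below it.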

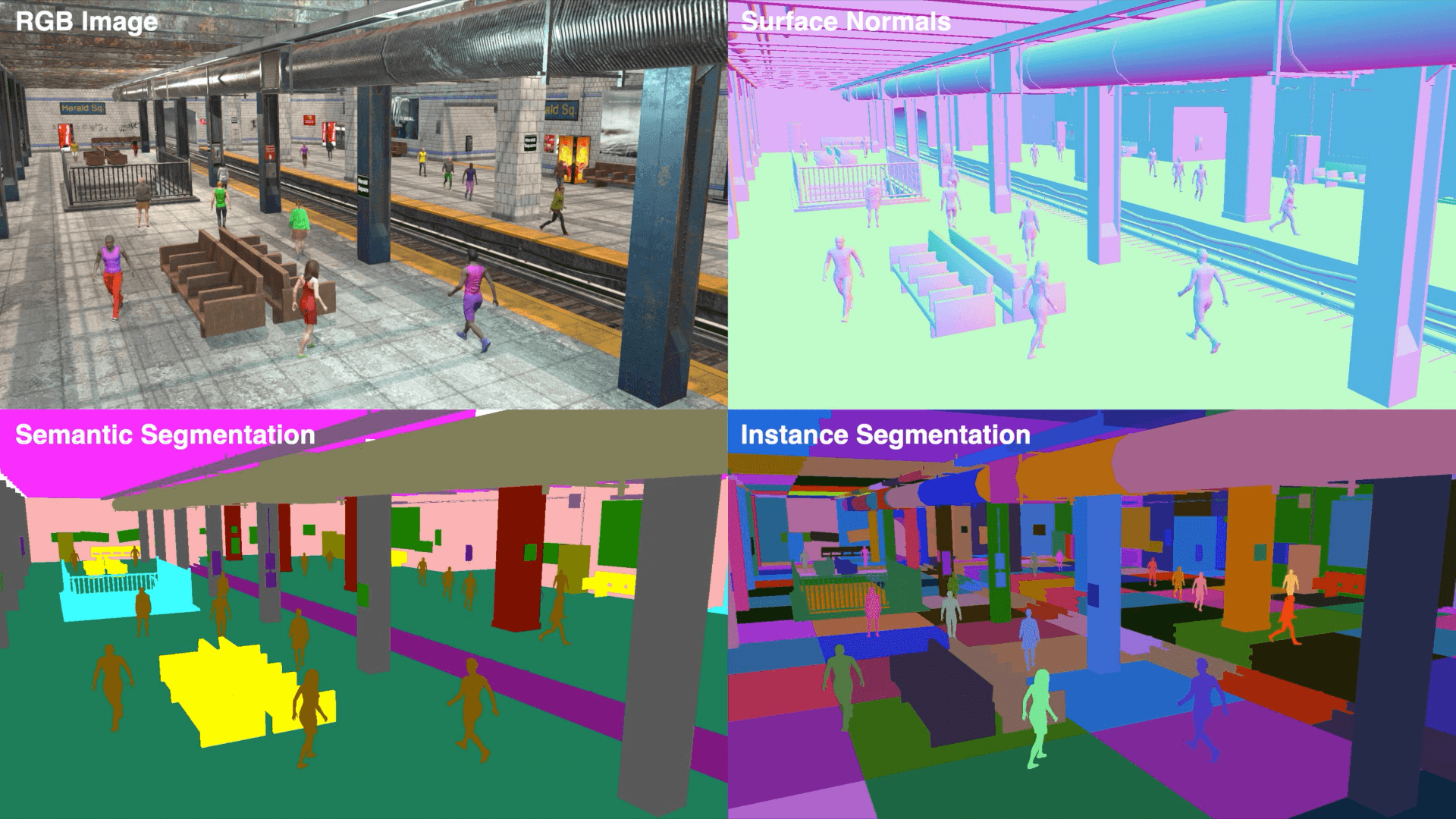

NOVA: Rendering Virtual Worlds with Humans

Today, cutting-edge computer vision research greatly depends on the availability of large datasets, which are critical for effectively training and testing new methods. Manually annotating visual data, however, is not only labor-intensive but also prone to errors. In this study, we present NOVA, a versatile framework to create realistic-looking 3D rendered worlds containing procedurally generated humans with rich pixel-level ground truth annotations. NOVA can simulate various environmental factors such as weather conditions or different times of day, and bring an exceptionally diverse set of humans to life, each having a distinct body shape, gender and age. To demonstrate NOVA’s capabilities, we generate two synthetic datasets for person tracking. The first one includes 108 sequences with different levels of difficulty, such as tracking in crowded scenes or at nighttime, and aims to test the limits of current state-of-the-art trackers. A second dataset of 97 sequences with normal weather conditions is used to show how our synthetic sequences can be utilized to train and boost the performance of deep-learning-based trackers. Our results indicate that the synthetic data generated by NOVA is a good proxy for the real world and can be exploited for computer vision tasks.

NOVA Website

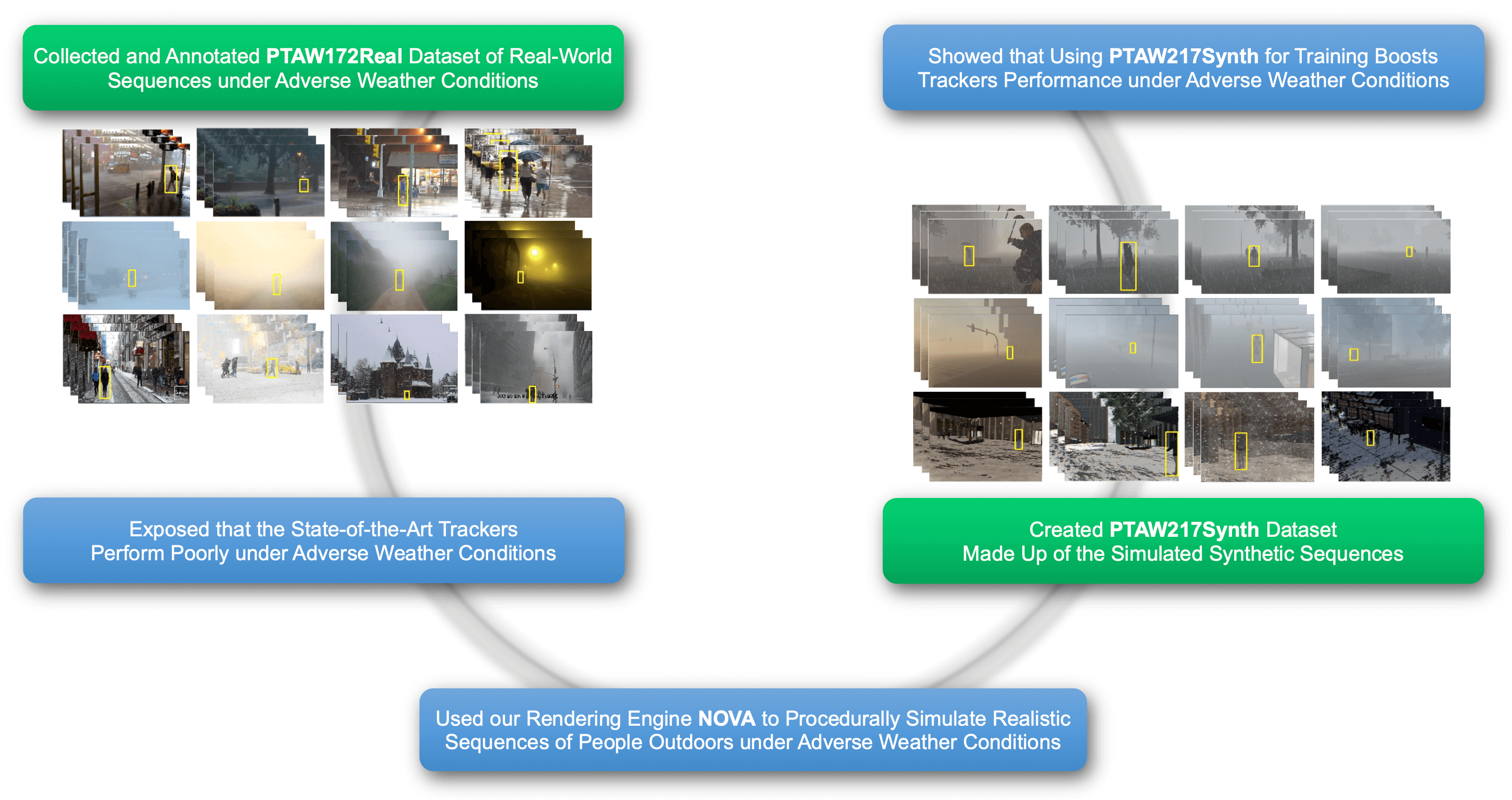

Using Synthetic Data for Person Tracking Under Adverse Weather Conditions

Robust visual tracking plays a vital role in many areas such as autonomous cars, surveillance and robotics. Recent trackers were shown to achieve adequate results under normal tracking scenarios with clear weather, standard camera setups and standard lighting conditions. Yet the performance of these trackers, whether correlation filter-based or learning-based, degrades under adverse weather conditions. The lack of videos with such weather conditions in the available visual object tracking datasets is the prime issue behind the low performance of learning-based tracking algorithms. In this work, we provide a new person tracking dataset of real-world sequences (PTAW172Real) captured under foggy, rainy and snowy weather conditions to assess the performance of current trackers. We also introduce a novel person tracking dataset of synthetic sequences (PTAW217Synth), procedurally generated by our NOVA framework and spanning the same weather conditions in varying severity, to mitigate the problem of data scarcity. Our experimental results demonstrate that the performance of state-of-the-art deep trackers under adverse weather conditions can be boosted when the available real training sequences are complemented with our synthetically generated dataset during training.

Paper Website

Synthetic18K: Learning Better Representations for Person Re-ID and Attribute Recognition from 1.4 Million Synthetic Images

Learning robust representations is critical for the success of person re-identification and attribute recognition systems. However, achieving this requires a large dataset of diverse person images as well as annotations of identity labels and/or a set of different attributes. Apart from the obvious privacy concerns, the manual annotation process is both time-consuming and costly. In this paper, we instead propose to use synthetic person images to address these difficulties. Specifically, we first introduce Synthetic18K, a large-scale dataset of over 1 million computer-generated person images of 18K unique identities with relevant attributes. Moreover, we demonstrate that pretraining simple deep architectures on Synthetic18K for person re-identification and attribute recognition and then fine-tuning on real data leads to significant improvements in prediction performance, giving results better than or comparable to state-of-the-art models.

Paper Website